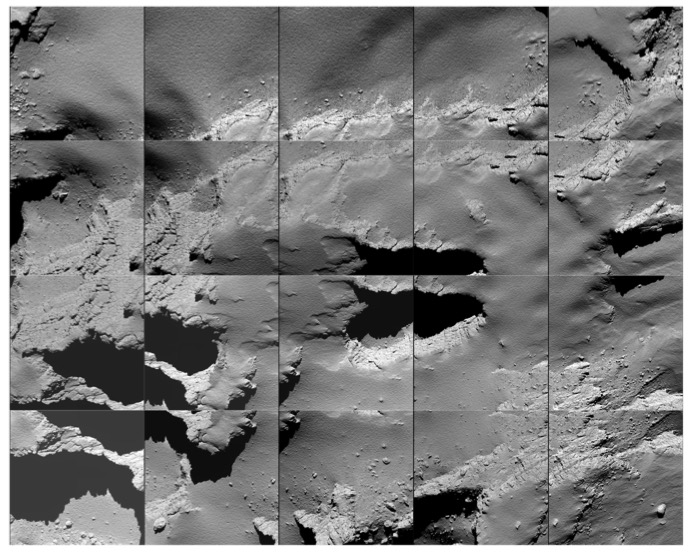

Here’s a sequence of images captured by Rosetta’s OSIRIS narrow-angle camera during its descent to the surface of Comet 67P/Churyumov-Gerasimenko on 30 September.

Mosaic of 20 images from the OSIRIS narrow-angle camera collected during the Rosetta’s final descent on 30 September. Credit: ESA/Rosetta/MPS for OSIRIS Team MPS/UPD/LAM/IAA/SSO/INTA/UPM/DASP/IDA

The last images in the first and second row (on the right) are published individually as Comet landing descent image – 5.8 km and Comet landing descent image – 5.7 km, respectively.

Rosetta’s target impact region is visible in the lower right part of the last image in the second row, as well as in the upper right part of the last image in the third row.

Note: details added to this post on 2 October.

Discussion: 49 comments

Congratulations to all on a very successful mission.

Where too next?

How about Didymos?

https://youtu.be/h4lpu8HbpFY

what is the order of the images? top left-top right and so on row by row?

A stitched version of the mosaic:

https://imgur.com/a/017Xo

Shadows seem to have changed at some places. So the stitching isn’t quite perfect everywhere.

Any stitching errors are mine.

Credit for the raw images: ESA/Rosetta/MPS for OSIRIS Team MPS/UPD/LAM/IAA/SSO/INTA/UPM/DASP/IDA

I don’t add any own copyright.

Nice work, Gerald. May I use this for my own additional info blog post on understanding the viewing perspective in this picture? (Which includes not even a whisper about stretch).

Hi A.Cooper,

Of course, whether for stretch, or not.

Just distinguish between the original Osiris images, my humble contribution, and your own work, such that readers are able to assign each one’s part.

Thanks, Gerald

So, that post will come out soon, just a bit of help for visualising this picture’s viewpoint. And I’ll take you up on your kind invitation to use it for stretch too because I’ll use that innocuous post as an input for another one apropos of something we’re already discussing elsewhere.

As you request, I shall of course differentiate between the contributions from OSIRIS, you and me.

And here it is. Better late than never:

https://scute1133site.wordpress.com/2016/10/10/part-66-the-final-approach-maat-02-mosaic/

Nice! I’d expect some changes of the depletions around the pits during perihelion. Did you find some?

Hi Gerald,

I am not expecting to find any trans-perihelion changes here at all. So much so that my main analysis would be to debunk any claims that there are. Any outgassing from this area has not recently remodelled the surface at all. The surface is devolatised, the outgassing is coming from deeper, and no “depletions” would result. Perhaps some slight chance of very minor rockfall, but unexpected as there were no nearby outbursts to cause “duck quakes”

Hi Marco,

showing, that there occurred no significant trans-perihelion changes here, would be an interesting result, too.

Hi Gerald,

Of course, demonstrating that there is no change is the opposite problem to that which I have argued ad infinitum with Ianw16. I am not sure at what scale the pictures can be definitive of, but I am sure we can rule out changes of more than 10m. We should be pretty sure of no changes of about 2m or so. Any smaller than that and there would be plenty of reasonable doubt either way, depending on the specific type of change hypothesised.

@ Gerald

Re. finding changes in the depletions. Ah, well that’s the subject of the next post, using these and other photos. It probably won’t be quite what you’d expect. I’ll link it here but it may be a few days. For the record, I’m not that concerned whether there are changes or not, having found two areas of subsidence elsewhere over perihelion 2015 but also leaning towards the hypothesis that these pits are very much in their swan song.

And Marco found subsidence at Anuket which you haven’t questioned (not the rock fall) so I’ll mention that as well for balance.

Hi Gerald,

I really like it when you share your image work! Pictures say a thousand words, and this one is fantastic as the original is virtually impossible to follow. Also with your morphing images that you linked:

It was instrumental in working out the discrepancy in peer reviewed published map of the same region, see:

https://marcoparigi.blogspot.com.au/2016/10/anuket-sobek-border-confusion.html

The change itself is visible and obvious from long distances, so many images, both NAVCAM and Osiris are available to cross check these regional borders. Again – even if you are not quite content with your finished product yourself, your image processing is very illuminating….

Hi Marco,

with your more than a year worth of practising with Rostta images, I’m rather confident, that you would be able to create your own stitches and morphs.

In the archives there is waiting a bonanza of images for you to be processed.

I can do this only occasionally for Rosetta. The late OSIRIS mosaic has been worth spending the time, since – as you say – it’s hard to get a consistent impression without doing so, and fully automated tools would likely have failed with these small thumbnails.

The morph has been a very incomplete draft, and can be done much better All you need is time, patience, and accuracy.

The animated gifs you created are a first step to make things easier to grasp.

I am not sure that you are right. After a year of looking at pre-perihelion photos of Anuket, I am familiar with every lump and bump. Having Navcam pictures stitched together has helped me become more familiar only because I could see the bigger picture and find my bearings at a glance rather than staring for hours.

Once a landscape has become familiar from many views from many angles it is like the mountain near my family home. I stare at the many rocks, ridges and forest, and I become more familiar over time at what they look like from different illumination at different times of the day and year. After flooding monsoon rains (January 11th 1998 – Night of Noah), I noticed a brown streak on one of the sloping forest sections. I realised it must have been a rockslide triggered by the flooding rains. Since it is part of a large Military reserve, no buildings or people were in that area, so it never made a mention in the news or in terms of the geology of the mountain. However, the scarp is still there and I could point it out to you even after 18+ years. Google maps show the area in 3D but there is no way even before and after Google earth satellite comparators would find it in the haystack of other optical differences.

In short our brain’s subconscious can do breathtaking 3D topographic maps that surpass any tools yet available. This is in fact a significant adaptive characteristic useful to hunter-gatherer societies that find their way through rugged (but familiar) terrain. The trick is using the part of the subconscious that recognises terrain and not that which attempts to make something like a face or a man made object out of terrain which is what pareidolia actually is.

Certainly once your subconscious 3D terrain modeller has found something objectively significant: – that which discovered it cannot be used to prove it objectively, so then you switch to looking for ways to falsify it objectively using the techniques you mention. eg. Can you use differences in lighting and parallax to get the same effect and so forth. That is the sort of thing that I’ve looked at meticulously in A.Cooper’s post that verifies the boulder triangle. Each possible illusion is looked at in turn, and each is ruled out as the cause and so are combinations of illusions.

What you have actually demonstrated usefully, is that far from doing that, you have generalised the properties of the difference we are noting and noted that illusions can and do look the scale and type of the change that we have found without being actual changes.

What we do for every possible change is look at every optical illusion that could be happening in the context of the images at hand, and rule them possible or impossible in turn until we either get certainty of change or reasonable doubt. We have only published those that we have come to a certainty of change with our criteria.

The physical environment for 67P/C-G is considerably different from Earth’s. Two major differences are gravity and liquid surface water.

Their persence on Earth tend to smooth Earth’s surface. On 67P these two factors are absent or almost absent.

This results in a very rugged surface on 67P at many locations. I’d even say, that 67P hasn’t a 2-dimensional surface, but a surface of fractal dimension between 2 and 3, closer to 3 than most of Earth’s surface.

This difference in surface structure can mislead our Earth-trained subconsciousness. To find these Earth-trained flaws, a stupid, but less prejudiced piece of software, can help.

Eventually the mix of apparent changes due to perspective, shadows, and actual topographic changes can be disentangled to a higher level of confidence, when working more formally.

Hi Gerald,

That is all well and good, but trust in stupid software leads to the following kind of error:

https://marcoparigi.blogspot.com.au/2016/10/anuket-sobek-border-confusion.html

Actual human familiarity with the areas concerned is paramount, even if just for cross checking.

Marco (and Gerald)

Re Marco’s linked blog post.

Agreed. This sort of mistake occurs if you use several pieces of, on the face of it, ‘clever’ software and then use humans to click slavishly along the borders generated without having any feel for whether something drastic has gone awry. You’re in danger of ending up with with anomalies that trickle down like a spreadsheet input mistake.

You need some sort of quality control from a human with good knowledge of the photos and a good 3D picture of where features are foreshortened or even curving away, out of sight. Marco is providing that surface with his blog post he’s linked above.

It’s not about relying slavishly on computers.

All the navigation ops would have been impossible to perform relying only on human intuition.

Humans make loads of random errors. Those are different from systematic flaws of “simple” software, as long as the software doesn’t mimick human behaviour.

Hence comparing automated results with”intiutive” results reduces the overall errors dramatically. That’s a fact, at least in the world I’m living in.

When I’m writing advanced software, it beats me almost always, usually by orders of magnitude, regarding speed and accuracy, on a basis of thousands of tests, which are as real as the display on which you’re reading this. Humans are inherently unreliable, when many easy jobs need to be performed in perfection. For a computer it’s straightforward to perform this kind of jobs flawlessly once the software is debugged.

So why should one reasonably refuse to use poweful tools when the result counts, and there are no ethical issues?

Btw. without “stupid” software we couldn’t even discuss here.

Hi Gerald,

I guess in the context of what you said in the last grand generalisations you mention – It is about relying on *software* to debug obviously buggy *software*.

With the finding of Philae, for instance, there is little point pinpointing a location to within a few metres on a *model* until the model is debugged *by humans*.

It took humans checking images, to recognise there was bugs in the software. Humans to recognise a shape was indeed Philae. Most importantly, it will take humans to write the papers that convince other humans that a computer is right or wrong in any particular case, and why.

With regards to the morphology and your claims that what we see is pareidolia, you are not convincing anyone.

I am saying that the automated software that is recognising 67P features, coordinates and measurements is particularly buggy and needs to be debugged by humans intimately familiar with the morphology of the comet.

Gerald

I don’t think caricaturing us as Luddites is going to wash. Clearly, we’re talking about the applicability of otherwise clever software to problems that haven’t been sufficiently well-characterised. Navigation ops are based on the extremely well-characterised physics of orbital dynamics. I’m sure the things you’re targeting for advanced programming are well-understood too.

When it comes to poorly understood processes the software often doesn’t capture the full complexity and if there are unknown unknowns, to coin a phrase, you’re in deep trouble- a wholly missed parameter that upsets everything. That’s where human intuition comes in. It either discovers the parameter before writing the software. Or it sees that the output is so absurd that there must be an undiscovered parameter. Or intuition fails, the computer says “yes” and we have to wait for Marco to say, “well, actually it’s a ‘no'”.

The example below appropriately involves using computers to fit the head to the body. It addresses your point but also happens to serve as a guide book for scientists when they eventually decide to have a go at fitting the head back onto the body. That’s something I’ve repeatedly suggested doing since January 2015, including a direct suggestion to the OSIRIS team on this blog exactly a year ago.

In the early days, you and Harvey were talking of using software to test the head rim/body shear line matches. That would supposedly avert any problems related to human bias. The hypothesised head lobe movement from the body is predominantly ‘vertical’ in upright duck mode, which is largely up the x axis of the comet so all the talk was of dropping the head lobe down onto the body and checking the extent to which the various matches deviated from each other (presumably after an initial purported match had been clamped together). However, the ‘vertical’ movement down the x axis is just one of the 12 degrees of freedom (3 translational axes and 3 rotational axes; 6 axes times 2 senses for each= 12 degrees of freedom).

We might include the other two translational axes, y and z timesed by two senses so as to capture all translational movements, set the programme running and see what we get. So we’d be guiding the head lobe down, aiming the initial head rim match at its target seating just like Soyuz docking with the ISS.

On first contact, the computer would immediately give a negative result for that match because only the the first-touch would match, a highly theoretical ‘point match’, which is non-significant until a one-dimensional line leading to another point can be matched. It then needs to match to a third point, i.e. matching an area, then to a fourth point, out-of-plane, to match a fractal volume (a bent area).

The reason the computer would give a negative result is that the rotation of the head lobe on rising from the body wouldn’t have been factored in. That requires the 6 rotational degrees of freedom. And indeed, the head is tipped forward (positive rotation about z) and possibly about x too. That’s why the “clever programme” that really is clever for, say, 3D printing (entirely 3-axis translational, no rotation) isn’t applicable to the problem at hand: a stretching comet. And a human, “slavishly clicking” around the two askew matches to disprove stretch will be labouring under a false assumption that he’s covered all bases.

Let’s say he suddenly realises the rotational axes need to be included and reruns the process with a rotation patch added. The computer will still say no. That’s because the head rim could reasonably be expected to have been initially resistant to being yanked away. It would have been yanked about prior to shear and be angled downwards (which it is). This latest model run would nevertheless be more accurate and the human would be able to see that the rim was sitting uncannily close to the shear line. But, being unaware of the possibility of angled head layers and of course, being a fallible human hopelessly prone to pareidolia, he would banish all such thoughts. He would trust the computer that had said “No” and start writing his stretch repudiation paper.

Let’s say a colleague came along in the nick of time and somehow modelled the tensile strength and ductility of the shear line perfectly (an impossible feat) and added that to the programme? That would characterise the yanked-about head rim. But the computer would still say no. The new patch would only work if the the two sections broke without crumbling. The inherent fractures (gaps whose tensile strength and ductility weren’t characterised by definition) contribute to the crumbling away of boulders and pebbles that fall and bounce randomly. In other words, they’re gouged out of the very match we’re trying to match up and end up somewhere else.

So let’s say our God-like modeller manages to model all fractures on all scales and even the multitudinous chaotic paths of boulders as they bounce down the Hapi cliff rim. And his programme picks them all up and slots them back into place on both sides of the match? Will the computer finally say yes? No it won’t, because it would be reasonable to assume that on shearing, there would be substantial outgassing or full-blown outbursts, casting tons of dust into space. But by now the head rim would be looking so closely fitting to the shear line that both scientists would really be wondering. But the dust emission means there’s an anomaly in the computer’s output and an anomaly means there’s no match.

So let’s say all the dust emissions get perfectly modelled, gathered up from deep space and packed back into the two supposedly matching surfaces. Will the the computer finally agree to the match that the two humans can see as blindingly obvious by now? No, because it would be reasonable to assume that just prior to shear, there were other layers delaminating away from the shear line just as the fibres on the surface of a rope break prematurely and recoil before full faliure. The weight of the recoiled layers before they recoiled would modify the tensile strength inputs for the head rim/shear line, above. That would mean that despite being perfectly modelled they were calculated erroneously as the free-standing, uncovered layers we see today. All owing to the imperfect knowledge of utterly random processes, in this case, the unknown phenomenon of layer sliding.

So let’s say the layer-sliding gets modelled perfectly too. Will that finally mean, with our five hastily added patches to date, that the computer will at last agree to the match? Not at all! We’ve been talking about the shear line and head rim as if they were set in aspic the moment the head detached. Why should the layer hosting the shear line stay put if the two layers above it recoiled when they gave up and tore prematurely? Why should the shear line layer hang around directly under the head rim just to satisfy the 5 patchy input parameters of an ill-thought-out software programme and save us the trouble of going to a sixth?

What I’m getting at here is the shear mindless randomness of natural physical processes that will defy our best efforts to characterise them unless we leave no stone unturned. Our best efforts to characterise them are absolutely synonymous with the parameters that we use to write our software. Therefore, the code used to run the programme is, in its entirety, a human judgement call. The language would be solid maths and physics, yes, but what physical phenomena are chosen and which are left out (i.e. not known) is the judgement call. In many cases it may well be correct, even very correct like orbital simulation programmes using GM/r^2 ad infinitum, and doubtless the programmes that you write and that beat you every time. But when the system is complex, our perceived input parameters and therefore our programmes become more and more precarious. That’s why we have to leave no stone unturned in characterising the parameters and that, in this day and age, still requires humans.

So yes, the sixth patch is that the shear line layer itself could reasonably have recoiled like the other layers above it and so be set just outside the reseated head rim. It would be concentric.

None of the six patches above is a made-up story. Each parameter from number 1, addition of rotation axes to number 6, recoil of the shear line layer has been laid out in detail with photographic evidence in the stretch blog. And it was a human who worked out those parameters, not a computer. All the computer does is reflect the human judgement call. And that’s why it’s wrong for humans to slavishly follow the output of a programme that they know is, at its root, a human construct which may be flawed.

I’m still turning over stones. But they’re getting smaller and smaller. If OSIRIS used the six parameters above, the fit would be very snug I’m sure.

Hi Marco and A.Cooper,

it appears you’re hugely underestimating the capabilities of today’s software systems, and even the capabilities of computers available when Rosetta was designed.

The star tracker finds the few stars within all the dust. So there exist formal methods to identify matches within overwhelmingly much noise, and where a human being has no realistic chance to see anything but noise or spurious patterns..

If you have available a photograph of stars, you may try on your own with a related scenario, e.g. here

https://nova.astrometry.net/upload

and compare your own capabilities by trying to find the match in a sky map like this one:

https://www.google.com/sky/

Regarding degrees of freedom: There are only 6 degrees of freedom for a rigid body seen from another rigid body in 3d Euklidean space. The observer cancels out.

A. Cooper, in your extended example I’d suggest better to rely on the software, which says that stretch doesn’t happen the way you suppose, if it happens at all.

Marco, how could Rosetta’s touchdown be predicted hours in advance within a nontrivial field of gravity with an accuracy of about a minute, if there would be significant flaws in ESA’s model of the comet? If you ever would have done this kind of calculations yourself, you would know, how extremely sensitive trajectories are with respect to errors in the model.

If you would be right with the scientists not knowing what they do, particularly with respect to computation, all the flight dynamics and planning would have failed.

And no, it’s not just Gm/r. You need a correct shape model, a model of the mass distribution, estimated outgassing etc. Any significant incorrect assumptions would have shown up immediately.

Planning three days in advance down to less than a minute in such a chaotic environment requires a much more resilent software environment than you appear to be aware. Due to the light travel times, the software needs to make decisions. There is no human intuition for last-minute corrections. The mere success of the Rosetta mission proves the reliability of the models and the correctness of the software implementation.

Gerald

Yeah…I forgot about the outgassing, so I would’ve slavishly believed the output of the bazillion GM/r^2 calcs the computer does so impeccably and sent Rosetta on her way. Thank goodness for the humans who realised that 🙂

As for the shape model, I was considering the bilobed gravity field as being baked in, insofar as it’s been quantified. And as I’m sure you know after reading my posts so assiduously from start to finish, I nailed that one to the floor, right down to the 7 km lower limit radius before gravitational anomalies take real effect. That was just as was stated by DLR in the landing post. I worked that out on paper so I knew exactly why they took hold at that radius rather than just noticing the programme noticing it. The programme would tell me nothing about the degree to which different aspects of the rotating shape were having which effect and so I’d just be slavishly bearing in mind a phenomenon (sub 7 km perturbations) without knowing how I might minimise them as I went lower.

My non-computer method showed me that using rotation plane approach or even the plane that resides between the rotation plane and the symmetry plane would minimise the perturbations. That may be intuitive once you see the symmetry plane of the comet is almost aligned with the rotation plane but there are still two competing phenomena that vie to throw Rosetta off. They’re baked into the GM/r^2 programme but the readout won’t differentiate between the two. You have to have the prior knowledge of the two phenomena before you can add a patch that will separate the two in the readout.

Strictly speaking, the two phenomena are recorded in terms of in-plane and out-of-plane anomalies. But again, if you just read that off after 2,3 or 5 hours of close approach, it won’t tell you where, when or why it happened. You need the patch for that.

The two phenomena are in Part 58 the preparatory post for the Apis flyby post, Part 59, so I won’t go into them here. They’re part of the reason that Apis was far and away the star candidate for a flyby. But the computer wouldn’t have told you that.

Don’t get me wrong about computers, they’re stunning in their usefulness once all the parameters are constrained. However, using brute force computing power in endless Monte Carlo runs instead of having a feel for the phenomena at play is a recipe for dulling the senses. I see it all the time in my NEO work. Astronomers relying on the orbital software and ephemerides, recording the various readouts, eg a particular close approach flyby, and having not an ounce of understanding as to how the heliocentric orbit relates to the geocentric approach radiant- even if they’re physically looking at both on the screen. This understanding is crucial to planetary protection and there are gaps in the coverage due to not understanding these radiants.

Only once I’ve done all the paper calcs and visualised it do I send it off to a wizard orbital software programmer who runs it, refines it and verifies my initial hypothesis. And his readouts are amazingly accurate and informative so I’m well positioned not to be “underestimating today’s software systems”. You and I are in agreement regarding their amazing capacity for accuracy once all parameters have been incorporated.

All I’m saying is that the number and essence of those parameters is sometimes difficult to quantify. Some are missed completely, some are badly quantified. Some are even baked into the software without being visible or known to the operators, nor even known to the coders themselves- they’re unexpected emergent phenomena which the computer can only represent but not explain eg the in-plane and out-of-plane anomalies for 67P and the similar radiants for certain classes of NEO that constitute the coverage gap.

Hi A.Cooper,

only a short reply today: It’s possible to write software which inferes model parameters from the data. You can calculate in both directions, where the inference methods are computationally more complex for the same class of problems.

These inference methods usually perfrom much better than humans, too. But sometimes they need some help by providing them a crude initial estimate to find the best solution.

You can combine methods, some for generating crude guesses, and those finding the local optimum.

But usually it’s best to combine the strengthes of humans and computers, since both have their strengthes and weaknesses.

Hi Gerald

Re software inferring model parameters.

I think we’re gravitating towards something of a consensus. No doubt you’re right that computers can be programmed to infer model parameters from the data. However they may find one parameter only to see it come and go in the output data for the want of understanding another parameter. That’s where your suggested combining of the strengths of humans and computers comes into play. And that’s what I’ve really been saying but expressing it in the negative: the limitations of computers and the failings of humans to notice those limitations. You’ve turned it on its head, expressing it in the positive, which is a useful development.

For my so-called sounding orbits in the rotation plane, used for characterising the gravitational anomalies, your inference programme may well have noticed a relationship of r^-2.5 (orbit radius to the power of minus 2.5). This would be the ghostly signature of the 1/r^2 radius variation and Rosetta’s instantaneous velocity combined. If the computer threw that up, it might even do a massive crunching of all the fundamental units of all the equations involved and infer the velocity equation and the inverse square law working in concert. Then again, it might just throw up the r^-2.5 exponent and we’d have to go away and work it out for ourselves.

Even if it crunched the units and got the v equation and inverse square law, it would only see it occurring as such for circular orbits above 7km. As soon as the orbit went from circular to elliptical, which it did for Rosetta at lower orbits, the relationship would smudge slightly (if slightly elliptical, say e= 0.1) and then all but disappear (very elliptical, say e= 0.5). The velocity/inverse square relationship would still be there but it would operated on by the eccentricity and, specifically, the angle the orbit tangent made to a circle tangent at that same instantaneous r value point along the orbit. But the computer wouldn’t know that at first and have to infer it as you say. So it would have to get from the state of not understanding why its beautiful r^-2.5 relationship was smudging to the point of knowing what was smudging it. To do this, it would first of all need to have a record of the eccentricity of each orbit and the true anomaly (instantaneous position) at every point at which it was taking data. Only then could it do the crunching actually to infer the eccentricity relationship. Of course, it’s possible for it to be programmed with eccentricity and true anomaly info as well (or to cross-reference it if it’s combining orbital elements with GM/r^2 perturbations in the same programme) but humans need to think of teasing it in the right direction by thinking of all these things in advance. But if it’s blindly crunching its way round the orbit doing mindless GM/r^2 sums, it can rely on each previous instantaneous state vector for the next input and eccentricity hardly ever gets a look-in. Even in that case, the inference programme might see that the r^-2.5 relationship magically becomes crystal clear at every periapsis and apoapsis (where the circle tangent = the orbit tangent) then fades in between. It might “get it” from that relationship and divine the true anomaly relationship from there and thence to eccentricity, but I’m not sure I’d bank on it.

Then this combined parameter setup would start smudging below 7km because out-of-plane anomalies would start making themselves felt. That depends on the sin of the angle that of all the little mass packet centres of gravity subtend with Rosetta and the centre of gravity. Then they’re balanced either side of the orbital plane to get the net sideways tug. Then there’s their varying distances…etc. etc.

I’m sure humans will be of less and less importance as these methods are improved and computers can be programmed to think of these eventualities like we do.

Hi A.Cooper,

with today’s software you can infer the mass distribution within a body from the Doppler velocity measurements of an orbiting probe, better two probes.

As a completed example, see our Moon’s gravitiy map obtained by the GRAIL mission:

https://www.nasa.gov/mission_pages/grail/news/grail20121205.html

A similiar method is currently applied to Jupiter, in order to find out, whether it has a solid core.

67P is a little more complex, since you need to consider the drag by outgassing.

Today you can solve equation systems with billions of degrees of freedom:

“We solve a heterogeneous nonlinear hyperelasticity problem discretized using piecewise quadratic finite elements with a total of 42 billion degrees of freedom in about six minutes”

Source: https://www.mathe.tu-freiberg.de/files/personal/253/sisc-99790.pdf

No way for human intuition to compete.

Of course, you need to feed the computer with an appropriate approach. This is, where humans are still needed to solve complex problems, Writing such software is not always easy.

Hi Gerald,

I think we may have strayed a little from the point at hand.

With regards to images in general and with the computer aided techniques that you mention, there is little relation between the ability of a model and computers to get to take the picture, and to correctly interpret different pictures taken at different times, in different lighting to work out what are changes, what are mirages, to work out parameters regarding surface changes. These parameters are then fed back into the model.

In the long run humans are the ones that write the papers to convince other humans of what changes are taking place, whether maps are right or wrong, the exact dimensions of the comet and the trend of changes over one perihelion to many.

Even with papers having passed peer review, there are question marks over these things, that mere humans are going to have to sort through and settle.

There are question marks about:

1. The latest maps of the regions.

2. Trans perihelion changes – Both those submitted to the call for contributions and some of the tentative ones on peer reviewed papers.

3. Why the initial CONSERT location ellipse for Philae did not include Philae’s proven location.

4. The exact dimensions of the comet including neck length and width before and after perihelion

5. The standard of proof for something considered impossible before Rosetta being proven (eg. O2, stretch, life)

In the grand scheme of things, these points will be dealt with gradually over time, with a great deal of thoroughness, both with human brains and computer aided processes.

Arguing the toss over whether the human brain is superior in one tiny area of topographical change recognition of comet nucleiis missing the point that the science will eventually get there and prove it one way or another. Of course I think I am right and have the confidence of conviction to publish, in blog form. Are you convinced enough that I am wrong to publish in the same or equivalent way?

Hi Marco,

I’ve neither the time nor do I see the need to intervene the work of the Rosetta scientists.

They are doing an excellent job, as far as I can see, and as far as I’ve occasionally verified.

Hi Gerald,

You probably haven’t read this thread then:

https://blogs.esa.int/rosetta/2016/09/29/comet-landscapes/#comment-605826

Rosetta scientists are busy and have multiple responsibilities. Peer review did not even come close to finding these errors. Errors are astonishingly easy to make, but can easily cascade into permanent bad science in future textbooks. This is because peer reviewed science doesn’t generally have a secondary review once published.

It is up to us. People like you and me that are obsessed with what is right and wrong and looking from the outside in.

Hi Marco,

relevant errors are usually corrected in later publications, sometimes in special “errata” papers, or by other researchers. This applies to the data reduction in the NASA PDS and ESA PSA, as well. Sometimes it takes years, until the issues with the instrument data are fixed.

Occasionally I find such errors in the data, and if relevant, I notify it to the instrument scientists via the responsible staff member. It’s then fixed in the next release, unsually within a few months.

It’s only recommended to notify errors, if the instrument scientists aren’t working on the respective issue anyway.

And sometimes I have known inaccuracies in my own products. But you need to release at some point, since rarely something is absolutely perfect. Science and technology would significantly slow down, if all issues would be resolved before release. You can expect loud protest, when results or data are held back indefinitely, until each perceivable quality test is designed and performed. Just remember the complaints caused by the delayed release of the OSIRIS images.

… and you are right, I hadn’t yet read the discussion in the thread you mentioned. I’m busy with not making too many errors myself. This results in some delay regarding reading articles or comments.

Eventually your obsessive inspection of 67P images pays. Congratulations!

Hi Gerald

It’s heartening to see your congratulatory comment to Marco.

However, I wouldn’t say obsessive, assiduous, yes. I know Marco has used ‘obsessive’ in the past so you may be echoing that so I can hardly blame you. But I do think you’re both wrong. Obsessive might be used to describe people who make lists of their LP’s or know the back story of every Star Trek character but not for people who are earnestly scrutinising a comet to the level of detail necessary to decipher it and inform the theories regarding solar system formation. It could hardly be more important work with far-reaching implications, not least of all understanding the formation of the Earth and evolution of life itself (paraphrasing a Rosetta mission statement).

Obsessive versus assiduous is an important distinction because it crops up where people refer to the time involved or the number of words or pages involved in explaining it all. It might seem obsessive to some but there are just as many words, pages, pictures and hours of scrutiny as is required to decipher the comet at the fine level of resolution that only we seem to do. Seeing as the comet won’t be deciphered properly unless it’s done at this level of detail, referring to Marco’s work as obsessive plays into the hands of these critics. The flip side of their criticism is that the level of detail they’re going to is sufficient. It clearly isn’t, as the points below show.

Regarding Marco’s long hours of scrutiny of Anuket. He told me in early 2015 that he expected to see changes on the Anuket neck over perihelion. I voiced my doubts to him on several occasions but looked on with deep interest as he investigated the before/after photos over many months from late 2015 onwards.

I already knew every nook and cranny on the neck so it was easy to make a judgement on his findings even if it required knowledge of a sideways view (3D appraisal).

After months of assiduous observation, there were a few tentative candidates but nothing concrete. If Marco had given up at that point, all that work would’ve been fruitless. But he carried on and found three changes in quick succession: the moving boulder triad, another triad that’s blogged but barely had an airing here, and the overhang collapses or rock falls.

So at that point, the amount of work done in observing the Anuket neck for changes was precisely that required to notice the changes, no more, no less. I don’t call that obsessive, I call it the right amount of work to do good science. I’m sure the 50 to 60 authors of each OSIRIS paper think or hope they also do the requisite amount of work to do good science and we don’t call them obsessive.

I sometimes research one feature (eg Ma’at 02, Deir El Medina) for as much as 30 hours. Over a period of many months, that is, but perhaps for 5 to 10 hours for one post so as to get the dotted annotations right. And when gearing up for dotting, it’s a point where everything from past analysis crystallises as I drop down to the finest possible level of resolution and detail. It might seem obsessive to some, but it is absolutely what’s required to decipher what’s going on. Marco and I are consistently looking at the comet at the 5-15 metre scale, zooming back out for an overview check, then zooming right back in to the adjacent area. That’s simply the required method to understand what’s going on. You could spend ten times longer without zooming in and out repeatedly like this and you’d never fully understand it. This will become abundantly clear in my next post which is about Ma’at 02 and the rather lax analysis of supposed changes in the supposed granular flows.

And is our scrutiny of 67P really any more obsessive that the accumulated input of hours and hours by, for instance, the dozens of authors on the Massironi et al. 2015 paper that concluded the comet was a contact binary? They couldn’t have presented that work without putting in all those hours. The data analysis was impeccable, very useful and absolutely required many hours of assiduous, not obsessive, work. It got them so far, all good data in the bag. But without the zooming in and out, it’s just the conclusion that’s wrong (in my and Marco’s view) and all their data support stretch. They must have spent at least an order of magnitude more time in accumulated hours on analysing the lobes. But we don’t call that obsessive. So why on Earth would we call two people spending a tenth of the time and getting results, “obsessive”.

If Marco’s hours of assiduous photo analysis produce amazing scientific results, those hours are simply ordinary, everyday work, just like the many hours of assiduous work required to design, build and send Rosetta in the first place.

Now his work has resulted in an important erratum in an OSIRIS paper, one which will avert the possibility of future papers contradicting each other as to which region particular jets emanate from and other such confusions. I’ve added two more corrections to the erratum. That’s purely because of the many hours I’ve put into scrutinising the comet for more important reasons and findings than correcting maps (although I’m delighted to have been of some help in the mapping).

Now Marco’s rock fall is gaining attention (the one you and ianw16 disputed). Again, that was discovered as a result of analysis at the 5-15 metre scale. Furthermore, I’ve related the Vincent et al 2016 outbursts numbered 27 and 31 to that rock fall and to the collapse identification next door to it. That’s the first evidence of erosion being directly related to outbursts actually during the Rosetta mission i.e. not supposition. It’s circumstantial evidence, not conclusive but the first evidence of any sort. It’s all as a direct result of scrutiny across large areas but down to the 5-15 metre level of resolution. Without that level of scrutiny for all those hours, none of this would have been discovered.

All Marco has done is spend the required amount of hours at the required level of detail to obtain the required results. There’s absolutely nothing obsessive about that.

Hi Gerald,

I think it would be far better for you to trust your own brain at some point. Computers and software work best s extensions to the brain’s function, not as replacements for it.

In terms of what is modellable and what is not, we certainly cannot easily model a biological cell even though we know it doesn’t break any laws of physics. Similarly with any sufficiently complex and Unknown “black box”. We cannot pretend to be able to model a biological cell until we see inside it for what it does. Similarly with a comet – We can only assume that phenomena obey the laws of physics. We shouldn’t feel we have to model it with a computer to get a sense of what is happening. A cycle of hypothesis and tests is not something that computers have been able to replace humans with, yet.

Another observation on Anuket regarding the timing of the collapse:

https://marcoparigi.blogspot.com.au/2016/10/timing-for-anuket-sah-collapse-not.html

Hi Marco, I’m still sorry about your presumed cliff collapse, As interesting as it would be, all I see is a an obvious change of perspective and illumination. This doesn’t rule out some change. But i simply don’t see any evidence. All features down to the resolution of the image I compared appear to be unchanged. I’ve compared a few dozens of features, and didn’t even find a single moving boulder. (I’ve compared 128010627 with 97265018)

Re obsessive: I’m working like that, too, in many cases. Sometimes that’s required to be successful. So I know what you are talking of. After some time, you can go back to “normal” mode, and refill your power for the next (ob)session or flow. The negative touch with this word is only for people who never experience it (from inside). For those who know it, it’s the highest degree of luck.

… regarding trusting my own brain. I’m comparing the performance of my own brain with that of computer software since decades professionally, usually many times a day. So I know very exactly, that I cannot trust my brain, nor that of other people. Therefore I know to the heck, that the enimy of being correct is presuming to be correct. And despite knowing all this, my brain still makes errors, and I cross-check everything of vital importance with formal methods. It would otherwise be entirely impossible to achieve anything useful in my job, or worse it could easily cause huge damage. Human intuition has turned out to be wrong in almost each case. It’s extremely hard to find out “the truth”, or better some truth, and overcome the fata morganas the human brain is producing all day long.

Hi Marco,

sorry, compared the wrong images, and thus can only confirm your assessment, that there haven’t occurred significant changes between the images you titled as January 2015 and December 2014.

I didn’t yet make the other comparisons, but need to continue doing something else, now.

Hi Gerald,

I think you must be saying that Ramy also is seeing something that could be a change but is probably an illusion?

The map errors indicate a systemic bug or error in the coordinate system that matches images with the model. This is likely to be human error, but we can’t rule out computer aided error.

I certainly have sidestepped this with my “obsessive” analysis of Anuket on my own without relying on software untested on comet surfaces. If the modelling system is suspect in some hard to define way, it was up to the people who were NOT relying on the model to point out errors the model didn’t pick up in terms of self consistency. That’s us.

Regarding the “enemy of being correct” : I think you presume to be correct in your analysis that my simple observation of change is incorrect.

What formal methods have you used to show that your presumption (in this case the presumption that I am incorrect) is indeed correct?

I think you are instead using formal methods that are inconclusive to confirm your own presumption which is incredibly strongly held, that there should be no change where I’ve found change.

Hi Marco,

the flaws in the map look more like human errors to me than a typical software error, but those who made the map know better.

You’re presuming too much about what I’m doing or not doing.

I’m simply too involved into other projects to have the time to check all your claims.

Cliff collapses are nothing strange or unexpected. Interesting is the relation to outbursts.

If I would seriously try to find the changes on 67P, I would write a software which finds all the changes, and also the cases of doubt which may or may not be changes on the basis of the available data.

But like for others my day has only 24 hours. Sofar I’m still restricted to this constraint, and I require the time for activities which I’m priorizing higher.

When doing investigations I can only recommend to cross-check with computer software, since it usually makes errors very different from human errors, and so the likelihood to find errors is improved considerably. It’s up to you to follow the recommendation or let it be.

This approach is working for me in almost any case, where flawless work is required. If you refuse to do cross-checking with formal methods or according software implementations, I can’t help. To me, that’s like trying to smell an image, instead of looking at it, i.e. refusing senses which would work better.

Hi Gerald,

It’s ok that you still don’t believe me regarding the cliff collapse. I have Ramy’s attention at least on this blog – Where he says about precisely the same scarp you are consistently denying:

“You are also spot-on with your conclusion about changes in the scarp. It is not very clear in the images but i believe there has been indeed a cliff collapse there. So thank you very much for your deilgent efforts!”

I am presuming you might defer to his authority?

May I suggest that it is a weakness of your own brain to presume that a computer model will be better than a human all the time. After all a computer will always defer to human instruction, while humans may not defer to a computer aided result.

I do fear the possibility of a future “new age of stupid” where all important technical knowledge is stored only in computers and robots and we just have to take their word that they are right.

Hi Marco

I didn’t model your presumed cliff collapse accurately in a software, nor did I perform a formal “manual” investigation.

When Ramy has done the verification, that’s ok for me.

I’m very aware of the weaknesses of my own brain. And I know, that my brain is nevertheless astonishingly able to create computer models which beat my own brain and that of others by orders of magnitude.

Computers don’t defer. They just execute the instructions they find, wherever they come from. It’s only wishful thinking, that they defer to human instructions. Many of today’s computer systems are too complex as that any human would be able to remotley understand what they are doing.

As a user of a computer you see only the systems which underwent some quality control. When you are working in development you work mostly with systems which don’t work as intended. Once they work about as desired, your job as a developer or engineer is done, and you work on the next flawed or incomplete system.

I think, we are already living in your “new age of stupid” in a double sense. People google instead of think, where it wouldn’t be necessary to google. And computers are actually much better than humans in many cases. Another problem I see is relying on opinions and intuition without checking the facts or even performing formal cross checking. In science you usually disbelieve, until sufficient evidence is available to convince you. You do not work in a way that you believe something unevidenced, until it is disproven.

I sincerely believe the “new age of stupid” has hit the sciences in a pernicious way already also.

To imagine what I am talking about – obviously this doesn’t happen – If someone extended your thinking regarding a programmed computer being more accurate to pass the captcha tests. If we don’t know the right answer or even understand the question and we are just writing a paper about it, we will believe whatever an automated system would say about it, because where we can test it in a controlled situation, the computer will always win. We would be loth to believe an outsider who claims to understand the question and knows the fundamental flaw in the computer’s analysis.

The question in this case is of how the nucleus surface is evolving and when, using images tuned to serve humans looking for interesting things in the images. If you think of a modified recapcha test on how things are changing from image to image of the comet, I am sure that humans will prove to be better than robots.

Hi Marco,

regarding your second paragraph: In stock exchange we have this situation since 10 years, at least. Transactions are made with microsecond steps. Traders try to get their computers as close as possible to the stock exchange to reduce signal travel times. Decisions are made fully automatically in tiny fractions of a second. As far as I know, this has been slowed down artificially in the meanwhile, that someone can plug out the cable, before a crash happens within milliseconds.

At CERN, about 100 petabytes of particle collision data are stored.

https://home.cern/about/updates/2013/02/cern-data-centre-passes-100-petabytes

No human can screen these data manually.

You need to rely on computers to have any chance to find particles like the Higgs boson.

Similar software hasn’t (likely yet) been applied to the surface changes of 67P. But I’d think, that’s mostly due to lack of funding, not because it couldn’t be done.

A few years ago I did some experiments with software which has been so complex, that I didn’t understand the questions nor the answers. Such things are well feasible. Those questions made sense, but they were far beyond my (biological) intellectual capabilities. All I was able, has been developing a technique to create those questions, not to really understand them, simply by complexity. Eventually I was able to create questions, the answer has been beyond the possibilities on a PC. But this has been far beyond the limits of any human I’m knowing of.

If you think at autonomous space exploration beyond direct accessibility from Earth, you’ll need systems which are able to pose complex questions on the basis of unforeseen observations, and perform according exploration.

The difficulty in my opinion is finding a solution which is powerful enough to make reasonable autonomous decisions, and to stay under human control in near-Earth situations.

Hi Gerald,

Your stock exchange example s simply a race condition, more to do with game theory than what I was talking about – Powerful computers are useless for robot recognition of recaptcha images, because of a lack of a formal process that replaces our intuition on some aspects, like recognising things from blurry images.

A. Cooper and I have been going through many 67P papers and finding serious errors related to this lack of intuition to recognise that basic premises are wrong enough to come to spurious conclusions.

With several of these, a formal approach can demonstrate these errata, and in these cases we are preparing emails to lead authors to alert them before the incorrect conclusions are taken as fact for future research.

Hi Marco,

blurry isn’t the problem for computer vision in the captchas. It’s mostly the large knowledge database you would need to find the underlying rules.

If you remember IBM’s Watson beating the best “Jeopardy” candidates, that’s not a fundamental issue, just a matter of funding / computational power and database.

Satellites surveillence systems do this kind of computer vision jobs since decades. But for obvious reasons I can’t tell you how and to which degree they do it. All I can say, you would be astonished, at least (based on published information, but not free as far as I know). But sometimes they are wrong.

In 1983 we (humankind) had a very serious incident due to a false positive:

https://en.wikipedia.org/wiki/1983_Soviet_nuclear_false_alarm_incident

This shows, that it’s better to cross check human results with software results, and vice versa, if feasible.

The trading software needs to interprete prices and news. If you ever tried to write such a software you would know, that it’s not straightforward. You need to implement the best trading strategy in the market. The trading computers are so smart in the meanwhile, that you can barely win as a human.

Sending emails to lead authors is certainly a way to get in touch with scientists, as long as it’s not too obtrusive. In cases, where the flaws are real and reproducible, they may even be grateful. But take into account, that they usually are very busy, and may not always respond as you are hoping.

Be also aware, that they can’t go too deep into speculations in the papers. And there are other restrictions to get an article published, depending on the journal.

We really have no speculations that we mention. The errors we have found thus far – Inconsistent border placements for regions (emailed and verified as errata) Purported changes at Deir el Medina that are a result of lighting and angle effect illusions (emailed to authors) and error in scaling RA/Dec as a cause of not being able to narrow down precession parameters (emailed to authors) are quite clear cut and the explanations are not speculative at all – But the insight of the matter at hand (eg. insight on what areas look like from different angles, and on what illusions are and what changes are likely, and insight into RA/Dec coordinate scaling) is what alerted us to the errors, and time spent proving that these were in fact errors on peer reviewed literature, was time well spent. It is astounding to me that papers with such errors passed peer review, but at the same time, it is the lack of insight that is the root cause, not the methodology, maths physics or data being used.

Hi Marco,

so thanks for you time spent for the review!

Your different approach may focus you on aspects others didn’t notice, even if it has always obviously been there.

Yes, flaws happen. I’m currently reviewing a paper myself, and so I can understand, that glitches can go unnoticed by the reviewers as by the authors. With each revision you find something you didn’t notice before.

And not everything is perfectly uniquie. So it might be interpreted differently by different authors or reviewers.

But usually the review process is / should be rather tight, so that most flaws are detected before publishing.

Hi Marco,

you may like to have a look at the Wikipedia articles about object or pattern recognition:

https://en.wikipedia.org/wiki/Outline_of_object_recognition

https://en.wikipedia.org/wiki/Pattern_recognition

Hi Gerald, Don’t forget to put in a last comment in the reflections blog entry…