Unlike virtual reality (VR), which immerses its user in a digital world, AR enhances a user’s existing environment by adding digital elements. One popular example is Pokémon GO, but AR is also being used to enhance real-world environments in medical training, tourism, design, modelling and more.

Seizing on this potential, Paul’s aim is to develop an AR procedure viewer that projects information onto an area in front of the user, guiding them through tasks such as setting up a tool or instrument like a rover. This could be used to support existing training or assist astronauts in a completely new situation.

When astronauts perform operations on the International Space Station, they are in constant contact with communications teams on the ground. These specialist communicators, known at ESA as Eurocoms, work with experts to answer any questions and guide the astronauts through the required procedures.

When astronauts travel farther from Earth, they may not have the same ‘real-time’ access to remote support as on the Space Station. “An AR procedure viewer would provide that extra level of guidance or clarification needed to act independently,” Paul explains.

Paul wearing and operating the HoloLens. ESA-T. Weyerhaeuser

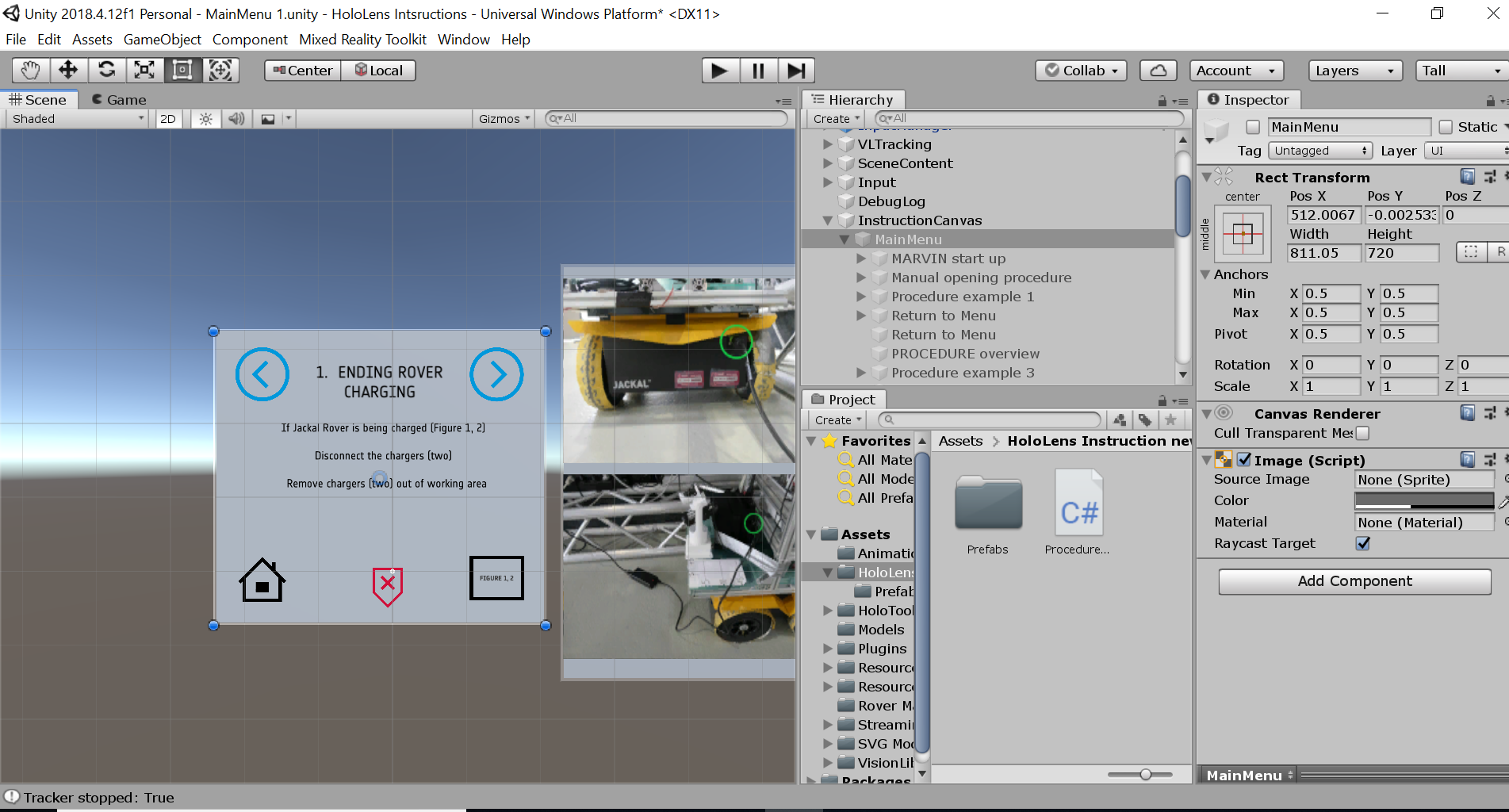

With a background in design, coding and software architecture, Paul has developed the viewer to a point where the user can see two different kinds of information at the same time. This is displayed on the inside of a HoloLens headset, worn like regular glasses.

First, the HoloLens shows a free-floating window with instructions positioned next to the object the user needs to operate – guiding them step-by-step through the necessary procedure.

HoloLens showing virtual instructions to operate a rover. ESA-P. Njayou

It also projects 3D information directly onto the area to be operated. One example is a 3D-modelled cable overlaid on top of a real cable on a rover. This way the user can first understand what needs to be done and then precisely locate the actual working area.

HoloLens projecting a 3D-modelled yellow cable overlaid on top of a real cable on the rover. ESA-P. Njayou

Paul says one of his main challenges was coding the program so users could use verbal commands or press ‘buttons’ in the air to control the HoloLens viewer. “Optimising object recognition was also quite complex,” he adds.

With the help of special software, he was able to program the device to detect the relevant object to be worked on. He then created a test case in which users can work through a procedure to make a rover ready for space.

Building an overlaying 3D-object and testing the code for the scenario later shown by the HoloLens. ESA-P. Njayou

Paul says cooperating and exchanging ideas with colleagues and team members at EAC, whether working in the same field or a different one, helped him develop and improve interactions and make the interface more user-friendly.

So, what’s next for AR and the procedure viewer? “Beyond improvements to systems like HoloLens 2, I believe AR will become crucial for deep space exploration where astronauts will encounter many unknown situations,” Paul says. “There is also opportunity for it to become more personalised, so assistance and guidance is given dependent on a user’s skills, and I see something like the AR procedure viewer playing a key role in supporting future tasks in deep space.”

With so much potential in development and application, Paul looks forward to the future. “With the help of AR, all the courageous explorers of deep space could be empowered to explore and discover more as we take our next steps beyond Earth.”
