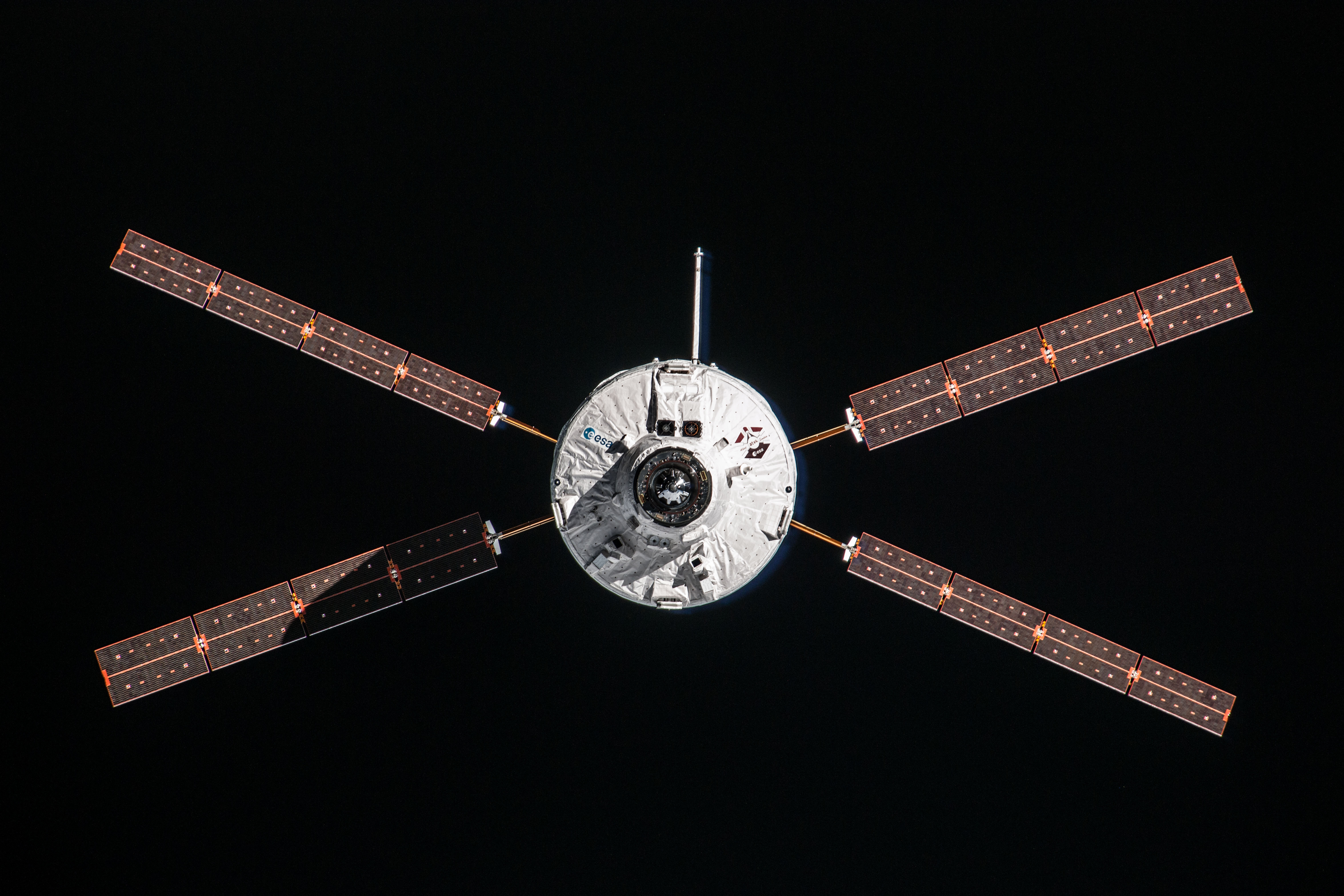

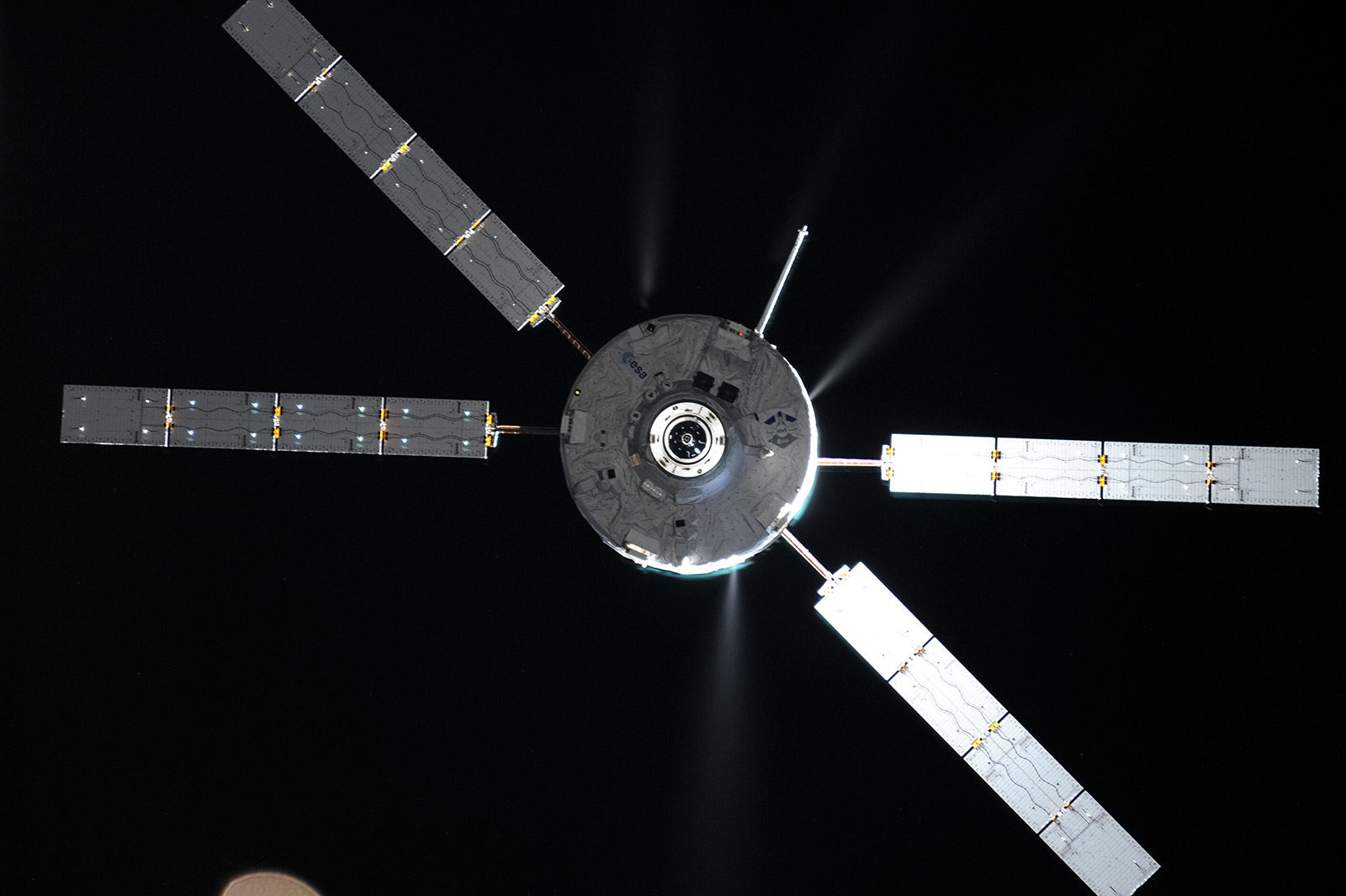

This week the first images from the LIRIS technology demonstration were published on the ESA website. LIRIS is demonstrating technology that could be used for docking with uncooperative targets by scanning objects in space with infrared cameras and lidar. For its first outing in space it was placed on ATV-5 to scan the International Space Station. The results are some incredible views of the weightless research centre.

Some people on Twitter were asking why the resolution of the images is so low. We asked Olivier Mongrard, project engineer for LIRIS at ESA, to explain:

The image resolution should be put in the context of a demonstration for Guidance, Navigation and Control. The sensors were selected for navigating spacecraft, not for public relations!

Infrared detectors typically deliver lower-resolution images than the cameras in today's mobile phones, but for vision-based navigation the resolution of the Space Station image is sufficient to navigate adequately at a distance of 70 m.

As we get closer to the target, the resolution is enough to make out even small features, and the accuracy achieved with these images will only improve as we start exploiting the data LIRIS has returned.

Keep in mind that we want to extract navigation-relevant information autonomously, using the spacecraft's onboard computers. One of the challenges of vision-based navigation is to extract this data without demanding too much computing power. So for our purposes too much resolution can be detrimental, as it would require more onboard computing capacity.
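The trade-off described above can be illustrated with a little arithmetic. The sketch below is not based on the actual LIRIS sensors: the 20-degree field of view and the detector sizes are hypothetical values chosen only for illustration. It uses the small-angle approximation to estimate how much of the target surface one pixel covers at 70 m, alongside how many pixels an onboard computer would have to process in each frame.

```python
import math


def pixel_footprint_m(range_m: float, fov_deg: float, pixels_across: int) -> float:
    """Approximate width (in metres) of the target patch seen by one pixel.

    Small-angle approximation: each pixel subtends fov / pixels_across radians,
    so at a given range it covers roughly range * that angle.
    """
    ifov_rad = math.radians(fov_deg) / pixels_across
    return range_m * ifov_rad


def pixels_per_frame(width: int, height: int) -> int:
    """Number of pixels that per-pixel image processing must touch in one frame."""
    return width * height


if __name__ == "__main__":
    RANGE_M = 70.0   # distance quoted in the interview
    FOV_DEG = 20.0   # hypothetical field of view, NOT a LIRIS specification
    # Hypothetical detector sizes, NOT LIRIS specifications.
    for width, height in [(320, 240), (640, 480), (1280, 960)]:
        footprint_cm = 100 * pixel_footprint_m(RANGE_M, FOV_DEG, width)
        print(
            f"{width}x{height}: {footprint_cm:.1f} cm per pixel at {RANGE_M:.0f} m, "
            f"{pixels_per_frame(width, height):,} pixels per frame"
        )
```

Under these assumptions, each doubling of linear resolution halves the patch of the Station seen by a single pixel but quadruples the number of pixels per frame. Any processing that visits every pixel therefore grows roughly fourfold, which is the kind of cost an onboard computer working in real time has to budget for.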

For some of the vision-based navigation that Rosetta is performing around comet 67P, the images are analysed on Earth with powerful machines; for LIRIS, the objective is for the spacecraft itself to do all the computations in real time…


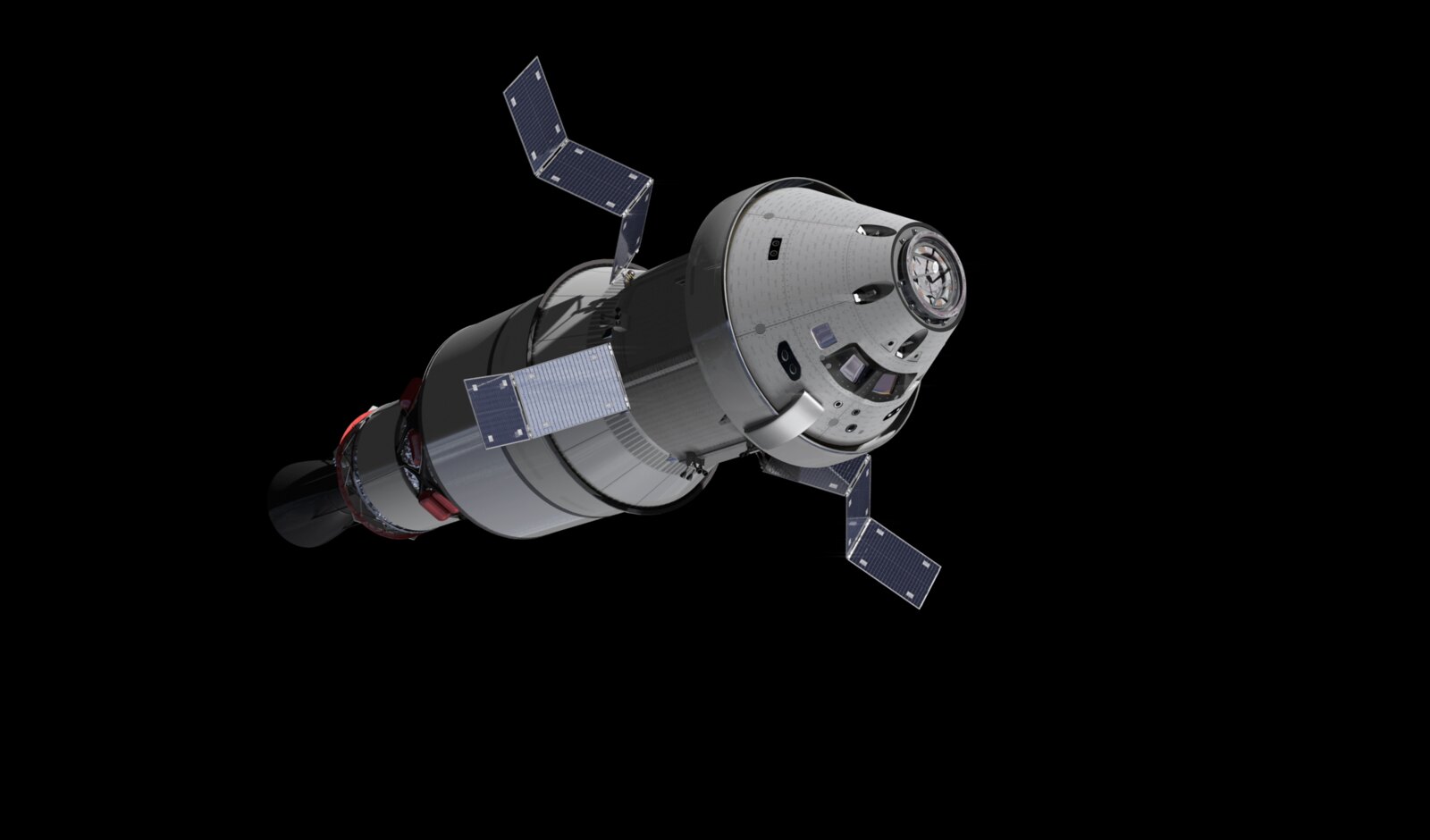
